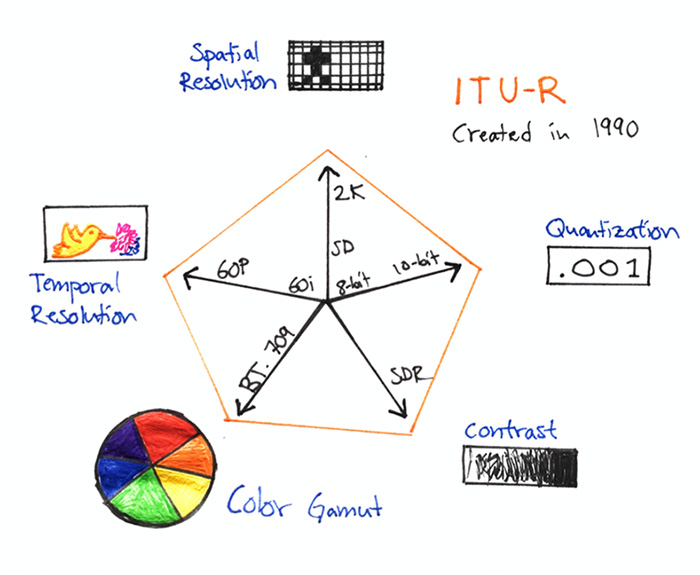

The 5 key parameters of digital imaging technology

HDR, or High Dynamic Range video, is a fairly new technology that has only been commercially available for a couple of years. HDR video should not be confused with HDR photography, which is a different technology. To understand HDR better, we need to start by looking at the 5 key parameters of digital imaging technology: temporal resolution, spatial resolution, quantization, contrast and color gamut.

These 5 parameters can be divided into those with quantitative properties and those with qualitative properties, meaning that they contribute either to the number of pixels in an image or to the quality of those pixels. HDR technology relates primarily to the latter.

Quantitative properties

The quantitative properties are quite straightforward to describe: it’s all about resolution. Spatial resolution concerns the number of pixels, and is described by the number of pixel columns and rows (horizontal by vertical), for example 1920x1080 (Full-HD) or 3840x2160 (UHD 4K). Temporal resolution refers to the frame rate of a video signal, measured in frames per second, or FPS. 24 FPS is common in cinema, although we have seen examples of higher frame rates (for example the 3D HFR versions of The Hobbit). Simply put, raising the spatial and temporal resolutions results in more pixels, more often. Finally, notation such as 1080p30 refers strictly to resolution, both spatial and temporal: 1080 lines, progressive scan, at 30 frames per second.
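To make "more pixels more often" concrete, here is a small Python sketch that multiplies spatial and temporal resolution into a pixel rate and splits a label such as 1080p30 into its parts. The function names are illustrative, and the parser only handles the simple lines-scan-rate pattern assumed here.

```python
import re


def pixels_per_frame(width: int, height: int) -> int:
    """Spatial resolution: number of pixels in one frame."""
    return width * height


def pixels_per_second(width: int, height: int, fps: float) -> float:
    """Spatial x temporal resolution: pixels delivered each second."""
    return pixels_per_frame(width, height) * fps


def parse_format(label: str) -> tuple[int, str, int]:
    """Split a label like '1080p30' into (lines, scan type, rate).

    'p' = progressive, 'i' = interlaced. For interlaced labels, conventions
    differ on whether the number counts fields or frames, so it is
    returned as-is without reinterpretation.
    """
    match = re.fullmatch(r"(\d+)([pi])(\d+)", label)
    if match is None:
        raise ValueError(f"unrecognized format label: {label!r}")
    lines, scan, rate = match.groups()
    return int(lines), scan, int(rate)


for name, (w, h) in {"Full-HD": (1920, 1080), "UHD 4K": (3840, 2160)}.items():
    print(f"{name}: {pixels_per_frame(w, h):,} px/frame, "
          f"{pixels_per_second(w, h, 24):,.0f} px/s at 24 FPS")

print(parse_format("1080p30"))
```

Note that UHD 4K has exactly four times as many pixels per frame as Full-HD, so at the same frame rate it also delivers four times the pixel rate.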

Certain standards may specify particular resolutions for HDR, but HDR as a technology is not directly tied to these quantitative properties; it is possible to create HDR video at different spatial and temporal resolutions.

Qualitative properties

If higher quantitative values can be described as more pixels more often, then higher qualitative values can be described as a larger volume of possible pixel brightness and color values, with finer numerical gradation. Let's look at what that means.

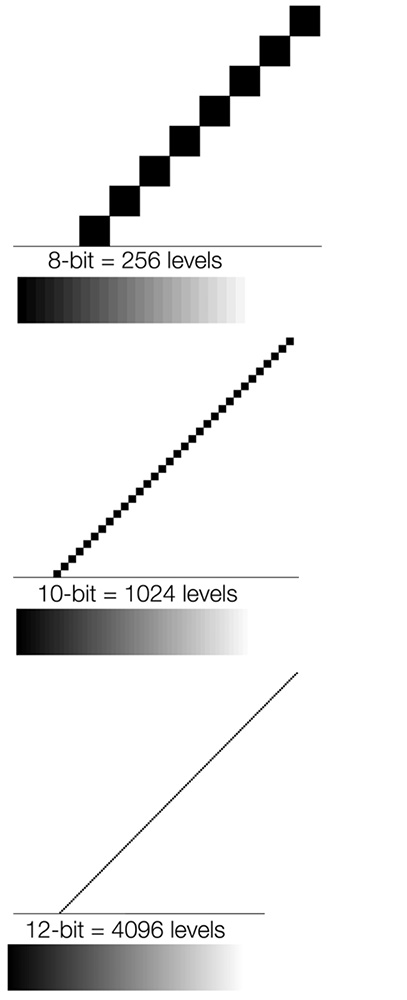

Quantization

The finer numerical gradation refers to quantization, which is the process of converting the continuously varying values of a signal (such as an analog voltage) into a finite set of discrete numerical code values. 8-bit means that the signal is quantized to 2 to the 8th power (2^8), or 256, code values. In the world of RGB color, this means 256 shades of red, 256 shades of green and 256 shades of blue. Multiplying the three channels together (256 × 256 × 256) defines the entire color palette of 16,777,216, or roughly 16.7 million, colors; this is what is meant by 8-bit color in the digital realm.

Even if 16.7 million colors might intuitively seem like a lot, it’s actually quite limiting, and can often result in banding artifacts over areas with fine gradation, such as a sky. Since 8 bits result in 256 code values per channel, there are also only 256 code values between black and white, where all three channels are used in equal amounts to generate the different shades of grey. To put this into perspective, the 256 shades of grey in 8-bit compare with 1024 in 10-bit or 4096 in 12-bit.

Quantization is also relevant to the parameters of contrast and color gamut, since the actual difference in brightness and hue between adjacent code values (how large the steps are) varies depending on the overall tonal range and gamut size.
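The arithmetic above can be checked with a short Python sketch. It assumes a normalized, full-range signal (0.0 is black, 1.0 is maximum) and simple round-to-nearest quantization; the ramp from 50% to 60% signal is a hypothetical stand-in for a smooth gradient like a sky, used to show how few distinct steps 8-bit has available compared with 10-bit and 12-bit.

```python
import math


def code_values(bits: int) -> int:
    """Number of code values per channel at a given bit depth."""
    return 2 ** bits


def total_colors(bits: int) -> int:
    """RGB palette size: code values per channel, cubed."""
    return code_values(bits) ** 3


def quantize(x: float, bits: int) -> int:
    """Map a normalized value in [0, 1] to the nearest integer code value."""
    x = min(max(x, 0.0), 1.0)
    return math.floor(x * (code_values(bits) - 1) + 0.5)


def distinct_levels(start: float, stop: float, bits: int, samples: int = 1001) -> int:
    """Count how many distinct code values a smooth ramp from start to stop lands on."""
    ramp = (start + (stop - start) * i / (samples - 1) for i in range(samples))
    return len({quantize(x, bits) for x in ramp})


for bits in (8, 10, 12):
    print(f"{bits}-bit: {code_values(bits):,} per channel, "
          f"{total_colors(bits):,} colors, "
          f"{distinct_levels(0.5, 0.6, bits)} distinct levels across the ramp")
```

Each extra 2 bits roughly quadruples the number of steps available across the same gradient, which is why banding that is visible in 8-bit largely disappears at 10-bit.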

Contrast

The contrast, or dynamic range, of a TV refers to its luminance: the maximum and minimum amount of light the TV is capable of producing. While luminance is measured in candela per square meter (cd/m²), commonly referred to as nits, dynamic range is expressed as a ratio between the brightest and darkest values a display can produce (for example 1500:1). The reference peak brightness for Standard Dynamic Range (SDR) is 100 nits, a figure that stems from old CRT technology. Contrast, also called dynamic range or tonal range, is the parameter that relates directly to HDR, which raises the maximum brightness level many times over, theoretically up to 10,000 nits with Dolby Vision. This allows for much greater differentiation in the highlights and a less flat image. Another possibility is to work with a variable black and white level, also known as tonal ranging. This makes it possible to create a strong temporal contrast when going from a bright scene to a dark one. The viewer's eyes may then have to adjust from the brightness to the darkness, creating extra tension that complements the story.
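Since dynamic range is just a ratio, it is easy to compute. This Python sketch also expresses the ratio in photographic stops (each stop is a doubling of light), a common alternative way of describing dynamic range. The display values below are hypothetical, chosen only for illustration, not measurements of real products.

```python
import math


def contrast_ratio(peak_nits: float, black_nits: float) -> float:
    """Dynamic range as the ratio of brightest to darkest luminance."""
    if black_nits <= 0:
        raise ValueError("black level must be positive for a finite ratio")
    return peak_nits / black_nits


def stops(ratio: float) -> float:
    """The same ratio expressed in photographic stops (doublings of light)."""
    return math.log2(ratio)


# Hypothetical displays: (peak nits, black level nits). Illustrative values only.
displays = {
    "SDR example": (100.0, 0.1),
    "HDR example": (1000.0, 0.05),
}

for name, (peak, black) in displays.items():
    ratio = contrast_ratio(peak, black)
    print(f"{name}: {ratio:,.0f}:1 (about {stops(ratio):.1f} stops)")
```

A true black of 0 nits would make the ratio infinite, which is why the sketch rejects it; in practice, quoted contrast ratios depend heavily on how the black level is measured.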

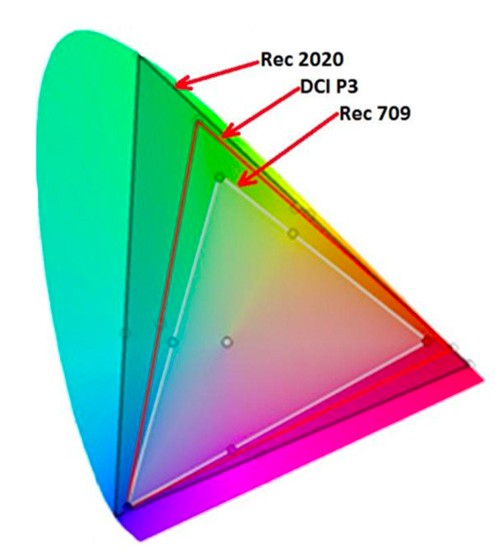

Color gamut

The last of the five parameters is color gamut, or color space, which defines the reach or richness of hues. The CIE 1931 chromaticity diagram (also called the color horseshoe) was designed to encompass all colors the average human can see, and different gamuts cover different portions of it. The common BT.709 color space (also known as Rec.709) leaves out a large set of visible colors that it cannot render, while larger color spaces such as DCI-P3 and BT.2020 can represent a much larger set of visible colors. Related to color gamut is the concept of color volume, a relatively new term within the industry. It refers to both high dynamic range and wide color gamut, which are becoming linked within different HDR standards. There is some confusion around color volume: a statement such as “the combination of HDR and a wide color gamut allows for things like a bright blue sky” actually refers primarily to HDR, not to its combination with a wide color gamut.
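One rough way to compare gamut sizes is to measure the area of each gamut's triangle of primaries on the CIE 1931 xy diagram. The Python sketch below uses the published xy primaries of BT.709, DCI-P3 and BT.2020, computes the triangle areas with the shoelace formula, and tests whether a given chromaticity falls inside a gamut. Keep in mind that the xy diagram is not perceptually uniform and says nothing about brightness, so these areas are only a coarse comparison.

```python
Point = tuple[float, float]

# CIE 1931 xy chromaticities of the red, green and blue primaries.
PRIMARIES = {
    "BT.709": ((0.640, 0.330), (0.300, 0.600), (0.150, 0.060)),
    "DCI-P3": ((0.680, 0.320), (0.265, 0.690), (0.150, 0.060)),
    "BT.2020": ((0.708, 0.292), (0.170, 0.797), (0.131, 0.046)),
}


def triangle_area(a: Point, b: Point, c: Point) -> float:
    """Shoelace formula for the area of a gamut triangle in xy space."""
    return abs(a[0] * (b[1] - c[1]) + b[0] * (c[1] - a[1]) + c[0] * (a[1] - b[1])) / 2


def _cross(o: Point, p: Point, q: Point) -> float:
    """Signed cross product telling which side of edge o->p the point q is on."""
    return (p[0] - o[0]) * (q[1] - o[1]) - (p[1] - o[1]) * (q[0] - o[0])


def in_gamut(xy: Point, gamut: str) -> bool:
    """True if the chromaticity lies inside (or on an edge of) the gamut triangle."""
    r, g, b = PRIMARIES[gamut]
    d1, d2, d3 = _cross(r, g, xy), _cross(g, b, xy), _cross(b, r, xy)
    has_neg = d1 < 0 or d2 < 0 or d3 < 0
    has_pos = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_neg and has_pos)


base = triangle_area(*PRIMARIES["BT.709"])
for name, prims in PRIMARIES.items():
    area = triangle_area(*prims)
    print(f"{name}: xy area {area:.4f} ({area / base:.2f}x BT.709)")

bt2020_green = PRIMARIES["BT.2020"][1]
print("BT.2020 green inside BT.709?", in_gamut(bt2020_green, "BT.709"))
```

The BT.2020 green primary falls outside the BT.709 triangle, which is one concrete example of a visible color that BT.709 cannot render.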

The thing is that if you want the full 100 nits of brightness from an SDR image, you won't have any color, since all three RGB channels will be at their maximum value, resulting in white. This is because RGB is an additive system. The only way to get those colors back in SDR is to drop the brightness. In HDR, the white level is raised, which makes it possible to achieve things like a bright blue sky, and also bright yellow fire.
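The additive-RGB argument can be put in numbers using the BT.709 luminance weights, under which pure blue contributes only about 7% of the luminance of white. This is a simplified Python sketch: it assumes linear-light BT.709 RGB, and it models the HDR case as the same primaries with a hypothetical white level of 1000 nits, whereas real HDR pipelines typically use BT.2020 primaries and the PQ curve.

```python
# BT.709 luminance weights for linear-light R, G, B (they sum to 1.0).
KR, KG, KB = 0.2126, 0.7152, 0.0722


def relative_luminance(r: float, g: float, b: float) -> float:
    """Relative luminance of a linear BT.709 RGB triplet, where 1.0 = white."""
    return KR * r + KG * g + KB * b


def output_nits(rgb: tuple[float, float, float], white_nits: float) -> float:
    """Absolute luminance on a display whose white (1, 1, 1) reaches white_nits."""
    return white_nits * relative_luminance(*rgb)


pure_blue = (0.0, 0.0, 1.0)
white = (1.0, 1.0, 1.0)

# 100 nits = SDR reference white; 1000 nits = a hypothetical HDR white level.
for white_level in (100.0, 1000.0):
    print(f"white at {white_level:.0f} nits -> "
          f"pure blue {output_nits(pure_blue, white_level):.1f} nits, "
          f"white {output_nits(white, white_level):.0f} nits")
```

In the SDR case, fully saturated blue tops out at a small fraction of the 100-nit white; raising the ceiling is what lets saturated colors become bright as well.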

So, color volume is a way to visualize the brightness range of the available colors. It relates primarily to HDR (which moves beyond the brightness limitations of SDR), but also to wider color gamuts (which expand the range of available hues).

SMPTE ST 2084 and the PQ curve

Before starting to work in HDR, it’s important to get familiar with the SMPTE standard ST 2084, which ratifies the Perceptual Quantizer Electro-Optical Transfer Function (PQ EOTF, also known as the PQ curve) created by Dolby. It allows for a peak luminance of up to 10,000 nits, and requires a signal with a bit depth of at least 10 bits. The PQ curve was modeled on how the human visual system interprets the world, which happens in a non-linear fashion. As an example, in a room lit by 50 candles, we perceive doubling the number of candles to 100 as an equally large step as removing half of them to leave 25, even though the first change adds 50 candles and the second removes only 25; in other words, we are more sensitive to changes in low light. In PQ, roughly half of the code values are used for the 0-100 nits range, meaning that 10-bit HDR has about twice as many code values in the SDR range as traditional 8-bit video.
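The PQ curve itself is compact enough to write out. This Python sketch implements the ST 2084 EOTF (signal to nits) and its inverse (nits to signal) using the constants defined in the standard, and checks the claim that roughly half of the signal range is spent below 100 nits. The code-count calculation assumes a full-range 10-bit signal; narrow-range video would shift the numbers somewhat.

```python
# SMPTE ST 2084 (PQ) constants, as defined in the standard.
M1 = 2610 / 16384          # 0.1593017578125
M2 = 2523 / 4096 * 128     # 78.84375
C1 = 3424 / 4096           # 0.8359375 (= C3 - C2 + 1)
C2 = 2413 / 4096 * 32      # 18.8515625
C3 = 2392 / 4096 * 32      # 18.6875
PEAK_NITS = 10000.0


def pq_encode(nits: float) -> float:
    """Inverse EOTF: absolute luminance in nits -> normalized PQ signal in [0, 1]."""
    y = min(max(nits / PEAK_NITS, 0.0), 1.0) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


def pq_decode(signal: float) -> float:
    """EOTF: normalized PQ signal in [0, 1] -> absolute luminance in nits."""
    e = min(max(signal, 0.0), 1.0) ** (1 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


# Share of a full-range 10-bit signal (codes 0-1023) spent on 0-100 nits.
codes_below_100 = round(pq_encode(100) * 1023)
print(f"PQ(100 nits)  = {pq_encode(100):.3f} -> about {codes_below_100} of 1024 codes")
print(f"PQ(1000 nits) = {pq_encode(1000):.3f}")
print(f"signal 0.5    = {pq_decode(0.5):.1f} nits")
```

The 100-nit point should come out at roughly 0.51 of the signal range, so about 520 of the 1024 ten-bit codes sit in the range that 8-bit SDR covers with 256.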

An important difference between an SDR gamma curve and the PQ curve is their relative vs. absolute nature. The actual output brightness of a code value in SDR varies depending on the display and its settings, while in PQ-based HDR a code value refers to a specific output brightness level. There's a lot more to be said about the problems with this approach, and how Dolby Vision and HDR10/HDR10+ try to solve them with static and dynamic metadata, but that topic will have to be saved for a later time.
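The relative vs. absolute distinction can be illustrated by feeding the same code value to two displays. This Python sketch models SDR as a plain 2.4 power law scaled by the display's own peak (a simplification of BT.1886 that ignores the black level), while the PQ function maps a code value to a fixed luminance regardless of the display. The display peaks are hypothetical, and the PQ side assumes a full-range 10-bit signal.

```python
# Simplified SDR model: pure 2.4 power law (BT.1886 with a zero black level).
SDR_GAMMA = 2.4

# SMPTE ST 2084 (PQ) constants.
M1, M2 = 2610 / 16384, 2523 / 4096 * 128
C1, C2, C3 = 3424 / 4096, 2413 / 4096 * 32, 2392 / 4096 * 32


def sdr_nits(code: int, bits: int, display_peak_nits: float) -> float:
    """Relative: the same SDR code value scales with the display's own peak."""
    return display_peak_nits * (code / (2 ** bits - 1)) ** SDR_GAMMA


def pq_nits(code: int, bits: int) -> float:
    """Absolute: a PQ code value always requests the same luminance (full range assumed)."""
    e = (code / (2 ** bits - 1)) ** (1 / M2)
    return 10000.0 * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


for peak in (100.0, 300.0):  # two hypothetical SDR displays
    print(f"SDR code 128/255 on a {peak:.0f}-nit display: {sdr_nits(128, 8, peak):.1f} nits")

print(f"PQ code 512/1023 on any PQ display: {pq_nits(512, 10):.1f} nits (as encoded)")
```

A real display that cannot reach the luminance a PQ code value asks for has to tone-map it, which is where the static and dynamic metadata mentioned above come in.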